Wearable vision devices to better monitor patients’ daily self-care tasks

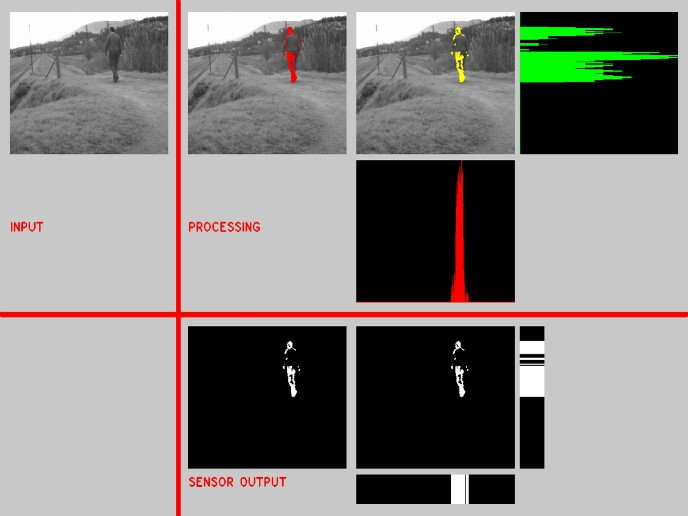

Clinicians observe and evaluate patients as they perform everyday hand-object interactions for diagnosis and assessment. Egocentric (first-person) vision systems with cameras mounted on patients are considered the optimal tool for monitoring and evaluating activities of daily living (ADL). This enabling technology would allow for long-term monitoring and could potentially capture as much information about a patient’s activities as a network of surveillance cameras.

With this in mind, the EU-funded EGOVISION4HEALTH (Assessing activities of daily living from a wearable RGB-D camera for in-home health care applications) project explored new egocentric computer vision techniques to automatically provide healthcare professionals with an assessment of their patients’ abilities to manipulate objects and perform ADL. Project partners introduced the use of depth (RGB-D) cameras as a new wearable device. They analysed object manipulation and ADL using detailed 3D models of the hands, the upper body and the full body.

Specifically, researchers developed a new computer vision-based method that estimates the 3D pose of an individual’s arms and hands from a chest-mounted depth camera in real time. They then analysed functional object manipulations during ADL and explored the problem of predicting contact and force from perceptual cues. Lastly, the EGOVISION4HEALTH team tackled the more complex problem of full-body 3D pose estimation in RGB images. The team artificially augmented a data set of real images with new synthetic images, and showed that convolutional neural networks (CNNs) can be trained on artificial images. Its end-to-end CNN classifier outperformed state-of-the-art results for 3D pose estimation in controlled environments.

EGOVISION4HEALTH proposed the use of wearable RGB-D cameras and advanced existing knowledge on hand and object detection in first-person views.
Practitioners such as occupational, rehabilitation and geriatric therapists will be better able to assess the functional ability or inability of their patients to perform ADL.