Extracting the signal in the noise: support for the hearing impaired

Humans are barraged by a cacophony of sounds throughout the day. Even in these sound-rich environments, they are able to localise the direction of a behaviourally relevant sound source – like a friend’s voice across a crowded room – with remarkable accuracy. We know little about the neural mechanisms underlying the computation of the 3D location of real-life, complex sounds in noisy environments. As a result, cochlear implants (CIs) do a poor job of separating the signal from the noise. This leaves the hearing impaired at a disadvantage and reduces their quality of life, including employment opportunities. With the support of the Marie Skłodowska-Curie Actions programme (MSCA), the SOLOC project used computational and experimental methods to gain insight into the brain mechanisms of sound localisation. These insights will be used to develop signal processing strategies for CIs that can simulate human sound localisation behaviour.

The signal-to-noise problem with cochlear implants

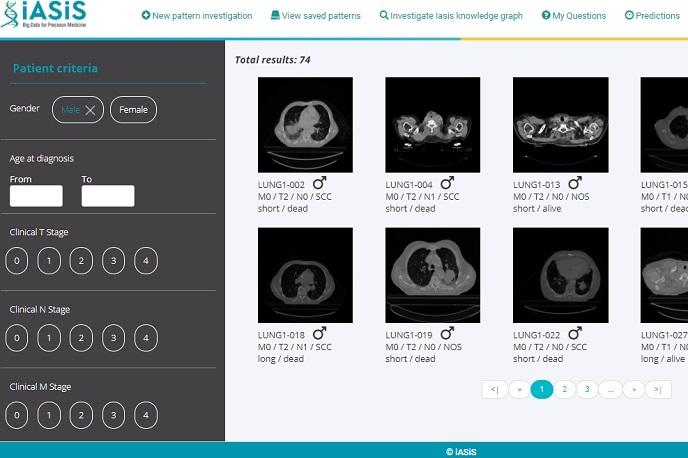

According to Kiki van der Heijden, MSCA fellow at the Donders Institute for Brain, Cognition and Behaviour at Radboud University, the project coordinator, “due to technical limitations of CIs, crucial temporal and spectral information is lost when auditory signals are transmitted to the auditory nerve of the CI user as a series of pulses. The resulting degraded representation of the listening scene is insufficient for the brain to filter sound sources of interest and suppress background noise.” The success of CIs in supporting speech perception against a silent background does not translate to successful speech-in-noise perception. “Research needs to focus on real-life sounds in ecologically valid listening scenes to optimise sound processing strategies for assistive technology. Thus, a critical component of the project was the creation of a database of spatialised, real-life sounds that will be made public to support further research in neuroscience, audition and computational modelling,” van der Heijden notes.

Neurobiologically inspired convolutional neural networks support feature extraction

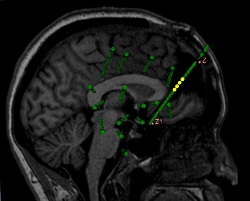

The project harnessed its database and AI to understand sound processing in real-life listening scenes, knowledge which will be used to optimise the sound processing strategies of CIs. Deep neural networks (DNNs) are algorithms trained to learn a representation of data at increasing levels of abstraction – or increasing ‘depth’ – loosely analogous to hierarchical processing in the brain. This allows them to perform complex, high-dimensional tasks such as image identification or sound localisation, and deep learning has become the gold standard among machine learning algorithms for such tasks. Convolutional neural networks (CNNs) are a specific and very successful type of DNN that extracts key features from the data through a series of convolutional operations. “We developed a neurobiological-inspired CNN trained on real-life sounds from our database. We showed that it can successfully simulate human sound localisation behaviour. We also used invasive intracranial recordings in neurosurgical patients listening to sound mixtures with two spatially separated talkers to gain insight into speech-in-noise processing in the brain,” states van der Heijden.
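To make the idea of a convolutional operation concrete, here is a minimal sketch in plain Python. It is not the SOLOC model: the toy waveform, the hand-picked `onset_kernel` and the helper names `conv1d` and `relu` are all illustrative assumptions. In a real CNN the filter weights are learned from data rather than chosen by hand, and many such filters are stacked in layers.

```python
def conv1d(signal, kernel):
    """Slide the kernel along the signal and take a dot product at each step.

    This is the 'valid' cross-correlation that CNN layers conventionally
    call a convolution.
    """
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Keep positive responses and set negative ones to zero."""
    return [max(0.0, v) for v in values]


# Toy waveform (assumption): silence, a short burst, then silence again.
signal = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]

# Hypothetical hand-picked filter that responds to a rise in amplitude.
onset_kernel = [-1.0, 1.0]

# The feature map is non-zero only where the burst begins.
feature_map = relu(conv1d(signal, onset_kernel))
print(feature_map)
```

The single non-zero entry in the feature map marks the onset of the burst, which illustrates how one filter can pick out one kind of feature from a raw signal.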

From CNNs to CIs: A step-change in sound localisation for the hearing impaired

The project’s success confirms that multidisciplinary research projects at the interface of neuroscience, computational modelling and clinical research are crucial for the development of new assistive technology. SOLOC delivered biologically and ecologically valid computational models of spatial sound processing in the human brain. These models will support the optimisation of algorithms that maximise the availability of spatial cues for hearing-impaired listeners, strengthening the bridge that CIs provide between the brain and the environment.