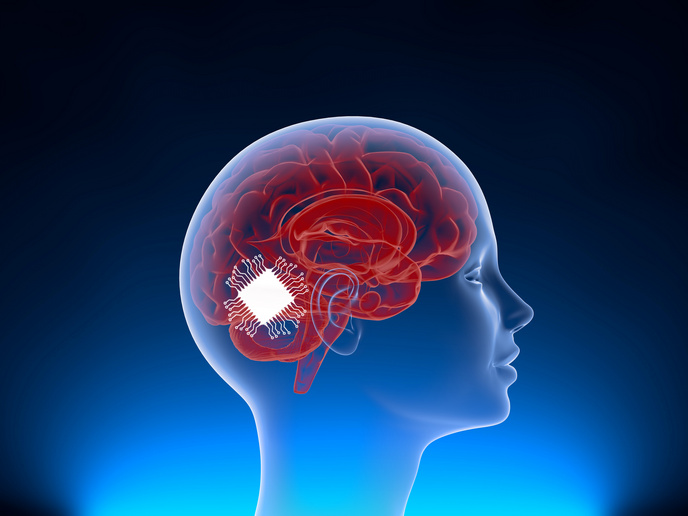

A speech brain-computer interface

Aphasia is an acquired disorder of speech caused by degeneration of, or damage to, the brain areas that control speech, typically following injury or stroke. The resulting loss of communication with others has debilitating psychological consequences for the individual and their family. Restoring speech in patients with aphasia requires a neuroprosthetic device known as a brain-computer interface (BCI) that can read the brain signals related to their intended speech. Although BCIs for hearing augmentation exist in the form of cochlear implants, the technology for restoring lost speech lags behind.

Novel technology for predicting speech

The key objective of the EU-funded BrainCom project was to advance rehabilitation solutions for restoring speech and communication capabilities in disabled patients, using innovative technologies that can decode neural activity and predict continuous speech. The human brain is astonishingly complex, consisting of as many as 100 billion neurons. Fully understanding the underlying principles of such an intricate system requires simultaneously detecting the electrical activity of large neural populations with high spatial and temporal resolution. Existing technologies, such as functional magnetic resonance imaging or electroencephalography, are relatively effective at indicating the sorts of neural signals relevant to speech. However, they are too slow to be used in a realistic situation such as conversation. Electrocorticography offers an attractive alternative: electrodes placed on the surface of the brain can read electrical activity at high resolution and very quickly.

Graphene sensors advance electrocorticography neural interfaces

Scientists took advantage of the unique electronic properties of graphene to build flexible arrays of transistor-based sensors capable of detecting neural activity over large areas of the brain. Thanks to these graphene transistors and their multiplexed operation, it was possible to build arrays with thousands of recording sensors. The graphene sensors were reduced in size to roughly the dimensions of a single neuron while maintaining high signal quality. The idea was to place these sensors in regions of the cortex known to be involved in speech. Using sophisticated algorithms, the BrainCom team extracted brain data and predicted speech production with high accuracy. “When users of this technology vividly imagine that they are saying words in their head, the brain fires similar patterns of activity as if they were actually saying them out loud,” explains project coordinator Jose Antonio Garrido. The BrainCom real-time processing technology is intended to make it possible for language-compromised users to externalise non-articulated inner speech. Phonemes, words, sentences and dialogue could then be produced from accurately decoded neural signals.

Technology transfer into industry

The next step is to scale up the production of the BrainCom neural interfaces and test their performance in human clinical trials. Multi Channel Systems plans to launch the graphene neural sensor technology on the preclinical neuroscience market during 2022. The medical technology company INBRAIN Neuroelectronics will conduct the first human clinical trials to demonstrate the safety and functionality of the BrainCom graphene technology. BrainCom innovations advance the field of clinical neuroscience and the potential applications of implantable neural interfaces. Apart from speech restoration, the technology can be used for the rehabilitation of cognitive functions as well as for epilepsy monitoring.