Processing spoken language

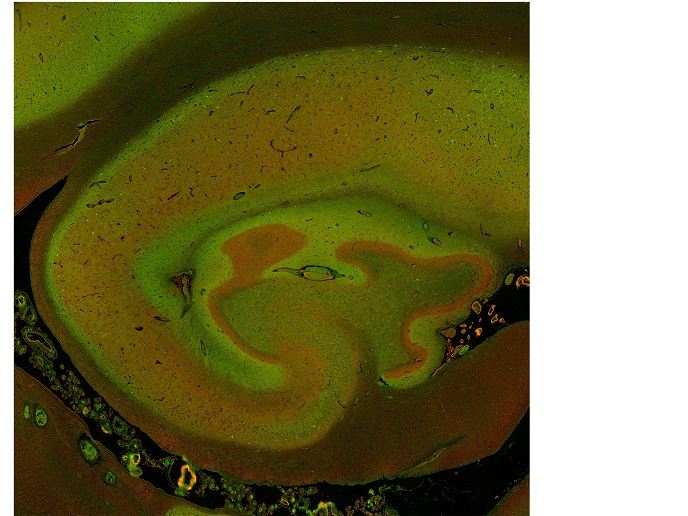

Researchers, working with the Basque Centre on Cognition, Brain and Language (BCBL), launched the project 'Prediction in speech perception and spoken word recognition' (PSPSWR) to expand our knowledge of how speech understanding works. PSPSWR began with the hypothesis that as people listen to spoken language, they make predictions about the content based on their knowledge of their native language. The team developed a series of experiments using Basque to test this hypothesis.

The first study asked participants to listen to Basque pseudowords and respond when they heard the sibilant ('s'-like) sound they were monitoring for. There were three conditions: match (same point of articulation), mismatch (a different point of articulation) and control (non-sibilant). Mismatch items produced longer reaction times than match or control items, suggesting that listeners are sensitive to these complex sound patterns and use their knowledge of the phonological system to parse the signal in real time.

In a follow-up study, participants were again presented with pseudowords while their ongoing brain activity was recorded using electroencephalography (EEG). The findings demonstrate that the auditory cortex is sensitive to such complex patterns: mismatch items elicited the largest response over fronto-central electrode sites just 75 ms after the relevant sibilant sound was heard.

In short, the series of studies carried out in the project indicates that the brain processes information differently when it can predict upcoming speech than when it cannot, shedding new light on the predictive mechanisms behind language comprehension.